Google Translate on Smart Glasses: The Dawn of a Seamless Conversational Future

The landscape of communication is poised for a radical transformation, and at the heart of this evolution lies Google's growing effort to bring its Live Translate feature to the emerging category of smart glasses. Current wearables offer a glimpse of a hands-free future, but it is the promise of instantaneous, context-aware translation that could elevate these devices from novelties to indispensable tools. We at Magisk Modules believe that Google's strategic push to make Translate the killer app for smart glasses is not an incremental improvement but a fundamental shift that will redefine how we interact with the world and with each other. This article explores the implications, the technical underpinnings, and the user-facing benefits of this development, and why it stands to be a major advance in cross-cultural communication.

The Evolution of Translation: From Text to Real-Time Auditory Immersion

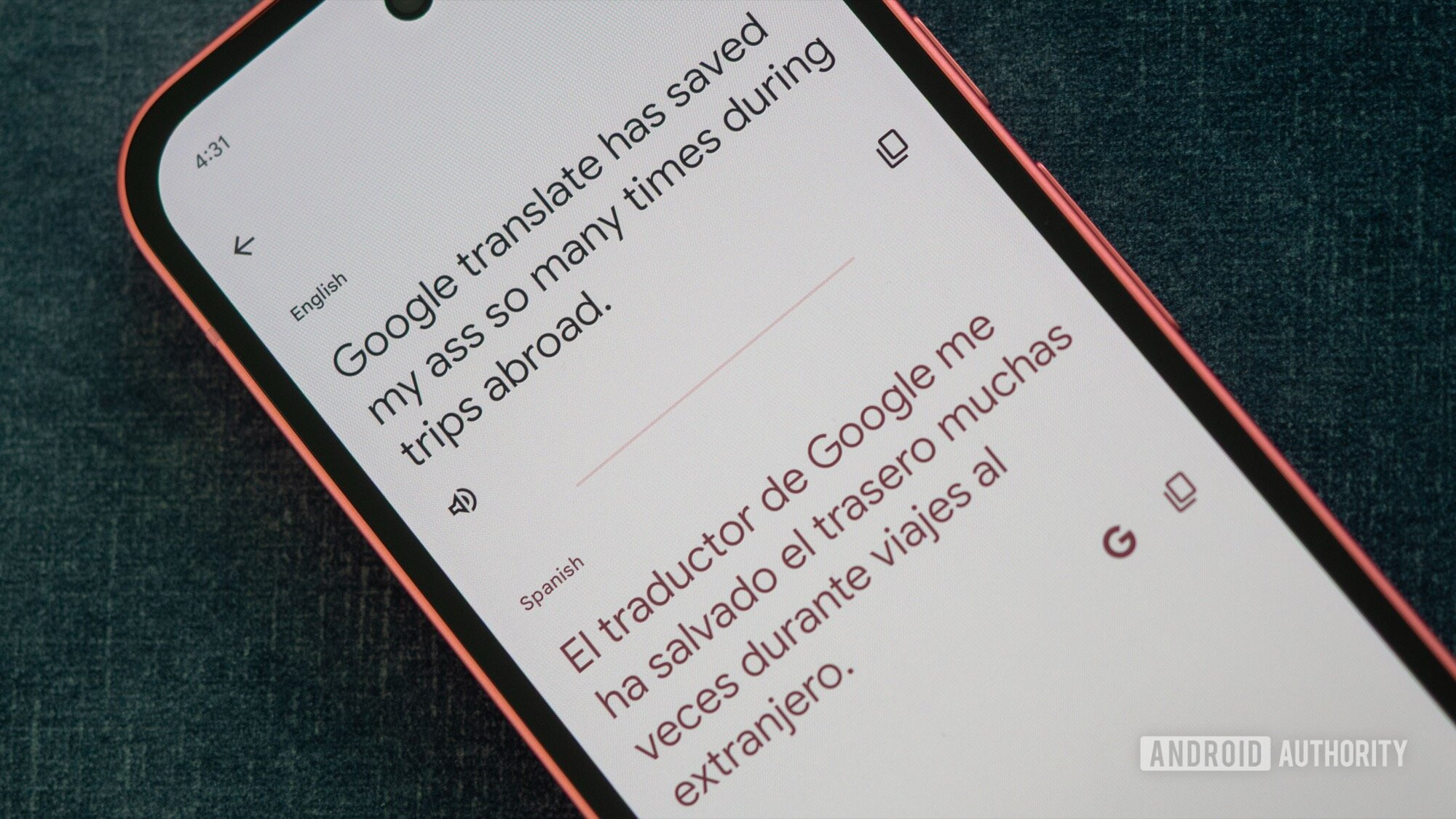

For decades, digital translation has been a journey of approximations. Early tools relied on rigid dictionaries and basic grammar rules, leading to stilted and often nonsensical outputs. The advent of statistical machine translation and, more recently, neural machine translation (NMT) represented significant leaps forward, enabling more fluid and contextually relevant translations. However, these advancements were largely confined to screens – smartphones, computers, and dedicated translation devices. The inherent friction of pulling out a device, initiating an app, and then deciphering the translated text on a small display created a significant barrier to truly natural communication.

Smart glasses, with their potential for unobtrusive, always-on connectivity, offer a natural platform for overcoming this barrier. Google's vision of integrating Live Translate directly into the visual and auditory streams of these devices promises to remove most of this friction. Imagine talking with someone who speaks a different language and, instead of fumbling with your phone, hearing their words translated directly into your ear or seeing subtitles appear discreetly within your field of vision. This is the future Google is working toward, and its implications are far-reaching.

Beyond the Screen: Redefining Conversational Fluidity

The core of Google’s strategy lies in its commitment to achieving real-time, seamless translation that feels as natural as speaking your native tongue. This is not about simply displaying text; it’s about fostering genuine understanding and connection. The ability to receive translated audio directly through the integrated speakers or bone conduction technology of smart glasses means that conversations can flow uninterrupted. There’s no need to break eye contact, no awkward pauses while you check a translation. This auditory immersion is crucial for building rapport and fostering authentic human connection, breaking down the invisible walls that language differences often erect.

Visual translation adds another layer of comprehension, whether as augmented reality subtitles anchored near the speaker or as contextual information appearing in your periphery. This multi-modal approach lets users choose the output that suits them and their surroundings, so the translation is easy to take in at a glance. For people who are hard of hearing, or anyone in a noisy environment, these visual cues become invaluable, offering a vital communication lifeline.
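To make the subtitle idea concrete, here is a minimal Python sketch of how translated text might be split into short caption frames for a narrow heads-up display. The 32-character line width, the two-line frame, and the `caption_frames` helper are hypothetical assumptions chosen for illustration, not specifications of any Google product.

```python
import textwrap

# Assumption: a hypothetical heads-up display that fits about 32 characters
# per line and 2 lines per caption frame; real devices will differ.
MAX_CHARS_PER_LINE = 32
MAX_LINES_PER_FRAME = 2


def caption_frames(translated_text: str,
                   width: int = MAX_CHARS_PER_LINE,
                   lines_per_frame: int = MAX_LINES_PER_FRAME) -> list[list[str]]:
    """Split translated text into small caption frames for a narrow display."""
    # Wrap on word boundaries so no line exceeds the display width.
    lines = textwrap.wrap(translated_text, width=width)
    # Group consecutive lines into frames that are shown one after another.
    return [lines[i:i + lines_per_frame]
            for i in range(0, len(lines), lines_per_frame)]


if __name__ == "__main__":
    text = "Excuse me, could you tell me where the nearest train station is?"
    for frame in caption_frames(text):
        print("\n".join(frame))
        print("---")
```

Wrapping on word boundaries keeps each frame readable at a glance; a real device would also need to time each frame to the speaker's pace.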

The Technological Underpinnings: Powering a Universal Translator

Achieving this level of seamless translation on smart glasses is a monumental technological undertaking, requiring sophisticated advancements across several key areas:
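The areas below typically work together as a cascade: speech is recognized, the recognized text is translated, and the result is rendered as audio or subtitles. The Python sketch that follows shows that structure under stated assumptions; `TranslationPipeline` and its stage callables are hypothetical names, and the toy phrasebook merely stands in for real recognition and translation models.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class TranslationPipeline:
    """Hypothetical cascade: speech recognition -> translation -> output.

    Each stage is a plain callable so that real engines (on-device or cloud)
    could be swapped in; none of these names are Google APIs.
    """
    recognize: Callable[[bytes], str]   # audio -> source-language text
    translate: Callable[[str], str]     # source text -> target-language text
    render: Callable[[str], None]       # target text -> speaker or display

    def process(self, audio_chunk: bytes) -> str:
        source_text = self.recognize(audio_chunk)
        if not source_text.strip():
            return ""  # Nothing was said; skip translation and output.
        target_text = self.translate(source_text)
        self.render(target_text)
        return target_text


if __name__ == "__main__":
    # Toy stand-ins for real models, for illustration only.
    phrasebook = {"hola": "hello", "gracias": "thank you"}
    pipeline = TranslationPipeline(
        recognize=lambda audio: audio.decode("utf-8"),
        translate=lambda text: " ".join(phrasebook.get(w, w) for w in text.lower().split()),
        render=print,
    )
    pipeline.process(b"Hola gracias")  # prints "hello thank you"
```

Keeping each stage as a swappable callable is one way to mix on-device and cloud engines. End-to-end speech translation models that skip the intermediate text also exist, so a cascade is only one possible design.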

Advanced Neural Machine Translation (NMT) Architectures

At the core of Google Translate are its NMT models. Trained on very large datasets of multilingual text and speech, these models are continually refined to capture nuance, idiom, and context more accurately. For smart glasses, they must be optimized either for on-device processing or for low-latency cloud translation, delivering high accuracy with minimal delay, which is critical for real-time conversation. Google's depth in transformer networks and attention mechanisms (the Transformer architecture itself was introduced by Google researchers) is central to building NMT models that can handle the complexities of spoken language in real time.
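Attention is the mechanism that lets a translation model weigh every part of the input when producing each word of the output. The sketch below implements standard scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, as described in the original Transformer paper, written in plain Python for readability. It illustrates the textbook technique only; production models use batched tensor implementations with multiple attention heads, and nothing here reflects Google's actual code.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries: list[list[float]],
                                 keys: list[list[float]],
                                 values: list[list[float]]) -> list[list[float]]:
    """Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V, one query row at a time.

    queries: n_q x d_k, keys: n_k x d_k, values: n_k x d_v (lists of lists).
    """
    d_k = len(keys[0])
    scale = 1.0 / math.sqrt(d_k)
    outputs = []
    for q in queries:
        # Scaled similarity between this query and every key.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


if __name__ == "__main__":
    q = [[10.0, 0.0]]
    k = [[10.0, 0.0], [0.0, 10.0]]
    v = [[1.0, 0.0], [0.0, 1.0]]
    print(scaled_dot_product_attention(q, k, v))
```

In this example the query points in the same direction as the first key, so nearly all of the weight lands on the first value vector.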

On-Device vs. Cloud-Based Translation: A Hybrid Approach

The trade-off between on-device and cloud-based processing is crucial for smart glasses. On-device processing offers the strongest privacy and independence, because translations are handled locally without sending audio or text to a server. However, it demands significant processing power and battery life, which limits the size and complexity of the models that can be deployed. Cloud-based translation requires an internet connection but can run larger, more complex NMT models, leading to higher accuracy and broader language support.

Google’s strategy likely involves a hybrid approach. Basic translation tasks, such as common phrases or frequently used languages, could be handled on-device for speed and privacy. More complex or less common language pairs could then be routed to Google’s powerful cloud infrastructure, ensuring a robust and comprehensive translation experience. This intelligent routing of translation tasks will be key to balancing performance, accuracy, and resource consumption on the power-constrained hardware of smart glasses.
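The routing idea can be sketched as a small policy function. Everything here is a hypothetical assumption: the set of language pairs with local models, the 25-word cutoff for a "short" utterance, and the `choose_backend` and `Request` names. Google has not published such a policy, so treat this as an illustration of the hybrid trade-off rather than a description of how Live Translate decides.

```python
from dataclasses import dataclass

# Assumption: hypothetical language pairs with downloaded local models,
# and a hypothetical length cutoff for what counts as a short utterance.
ON_DEVICE_PAIRS = {("es", "en"), ("en", "es"), ("fr", "en"), ("en", "fr")}
MAX_ON_DEVICE_WORDS = 25


@dataclass
class Request:
    source_lang: str
    target_lang: str
    text: str
    online: bool


def choose_backend(req: Request) -> str:
    """Return "on_device", "cloud", or "unavailable" (hypothetical policy)."""
    has_local_model = (req.source_lang, req.target_lang) in ON_DEVICE_PAIRS
    if not req.online:
        # Without a connection, the local model is the only option.
        return "on_device" if has_local_model else "unavailable"
    if has_local_model and len(req.text.split()) <= MAX_ON_DEVICE_WORDS:
        # Common pair and short phrase: stay local for speed and privacy.
        return "on_device"
    # Less common pairs or long, complex passages go to larger cloud models.
    return "cloud"


if __name__ == "__main__":
    print(choose_backend(Request("es", "en", "¿Dónde está el baño?", online=True)))
    print(choose_backend(Request("sw", "en", "Habari yako", online=True)))
```

The offline branch matters as much as the others: a hybrid design degrades gracefully to local models, but only for the language pairs it has available on the device.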

Speech Recognition and Natural Language Understanding (NLU)

Accurate translation begins with accurate recognition of spoken words. Smart glasses will rely on highly advanced speech recognition systems capable of distinguishing between different speakers, filtering out background noise, and understanding various accents and dialects. Beyond just transcribing words, the device needs to employ Natural Language Understanding (NLU) to grasp the intent and meaning behind the spoken phrases. This involves identifying entities, understanding sentiment, and disambiguating homophones and ambiguous sentence structures. Google’s extensive research in both speech recognition and NLU is a significant advantage in this domain.
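Filtering background noise usually starts with deciding which stretches of audio contain speech at all. Below is a deliberately simple energy-based voice activity detector in Python. The 16 kHz sample rate, 10 ms frames, the heuristic that the quietest 20% of frames represent background noise, and the 3x threshold are all assumptions for illustration; production systems typically rely on trained neural detectors and multi-microphone processing instead.

```python
import math


def frame_rms(samples: list[float]) -> float:
    """Root-mean-square energy of one frame of audio samples in [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def detect_speech(samples: list[float], frame_size: int = 160,
                  threshold_ratio: float = 3.0) -> list[bool]:
    """Flag frames whose energy rises well above the estimated noise floor.

    frame_size=160 corresponds to 10 ms at an assumed 16 kHz sample rate.
    """
    frames = [samples[i:i + frame_size] for i in range(0, len(samples), frame_size)]
    energies = [frame_rms(f) for f in frames]
    if not energies:
        return []
    # Assume the quietest 20% of frames (at least one) are background noise.
    quiet = sorted(energies)[:max(1, len(energies) // 5)]
    noise_floor = sum(quiet) / len(quiet)
    return [e > threshold_ratio * noise_floor for e in energies]


if __name__ == "__main__":
    noise = [0.01 * (1 if i % 2 else -1) for i in range(1600)]            # 10 frames
    tone = [0.5 * math.sin(2 * math.pi * 440 * i / 16000) for i in range(800)]  # 5 frames
    print(detect_speech(noise + tone + noise))
```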

Speech Synthesis and Auditory Output

Once translated, the output needs to be delivered in a natural and understandable way. High-quality speech synthesis is essential to ensure that the translated audio sounds human-like, with appropriate intonation and rhythm. This avoids the robotic and unnatural speech that plagued earlier translation systems. The integration of customizable voice options and even the ability to mimic aspects of the original speaker’s tone could further enhance the user experience, making the translated voice feel more personal and less jarring.
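One widely used way to control voice, speaking rate, and pitch in synthesized speech is SSML (Speech Synthesis Markup Language), a W3C standard accepted by many text-to-speech engines. The sketch below wraps translated text in SSML 1.1 `<speak>`, `<voice>`, and `<prosody>` elements. The `build_ssml` helper and the example voice name are hypothetical, and which voice names and prosody values are honored depends on the engine.

```python
from xml.sax.saxutils import escape, quoteattr


def build_ssml(text: str, voice_name: str, lang: str = "en-US",
               rate: str = "medium", pitch: str = "medium") -> str:
    """Wrap translated text in SSML so a TTS engine can apply voice and prosody.

    <speak>, <voice> and <prosody> come from the W3C SSML 1.1 specification;
    the accepted voice names and attribute values vary by engine.
    """
    return (
        '<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" '
        f"xml:lang={quoteattr(lang)}>"
        f"<voice name={quoteattr(voice_name)}>"
        f"<prosody rate={quoteattr(rate)} pitch={quoteattr(pitch)}>"
        f"{escape(text)}"  # Escape &, < and > so the markup stays well-formed.
        "</prosody></voice></speak>"
    )


if __name__ == "__main__":
    # "example-voice" is a placeholder, not a real engine's voice name.
    print(build_ssml("Fish & chips, please.", "example-voice", lang="en-GB", rate="slow"))
```

Escaping matters because translated output can contain characters such as `&` or `<` that would otherwise break the markup.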

Contextual Awareness and Environmental Sensing

The true power of Live Translate on smart glasses will lie in its ability to leverage contextual information. This means not just translating words, but understanding the situation. Environmental sensing capabilities of the smart glasses, such as microphones for ambient noise levels, accelerometers for motion detection, and potentially even cameras for visual context, can provide valuable clues. For example, if the glasses detect that you are in a bustling market, the speech recognition system can be tuned to better isolate the speaker’s voice. If the conversation involves a specific object or landmark, the system might be able to offer visual aids or contextual information. This situational awareness elevates translation from a simple word-for-word conversion to a genuinely intelligent communication aid.
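A minimal sketch of this kind of context-driven tuning: measure the ambient level in dBFS (decibels relative to full scale) from microphone samples, combine it with a motion flag from the accelerometer, and pick a recognition profile. The thresholds, profile names, and settings below are hypothetical placeholders that a real system would calibrate per device and microphone.

```python
import math

# Hypothetical profile boundaries in dBFS; a real system would calibrate these.
QUIET_LIMIT_DBFS = -50.0
BUSY_LIMIT_DBFS = -30.0


def rms_dbfs(samples: list[float]) -> float:
    """Ambient level in dBFS for samples normalized to [-1, 1]."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples)) if samples else 0.0
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def select_profile(ambient_samples: list[float], is_walking: bool) -> dict:
    """Pick hypothetical recognition settings from ambient noise and motion."""
    level = rms_dbfs(ambient_samples)
    if level < QUIET_LIMIT_DBFS:
        profile = {"name": "quiet", "noise_suppression": "low", "beamforming": False}
    elif level < BUSY_LIMIT_DBFS:
        profile = {"name": "moderate", "noise_suppression": "medium", "beamforming": True}
    else:
        # For example, a bustling market: suppress hard and focus on the speaker.
        profile = {"name": "busy", "noise_suppression": "high", "beamforming": True}
    # Walking adds wind and footstep noise, so raise the lowest suppression level.
    if is_walking and profile["noise_suppression"] == "low":
        profile["noise_suppression"] = "medium"
    return profile


if __name__ == "__main__":
    print(select_profile([0.1] * 1600, is_walking=False))
```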

User-Centric Benefits: Empowering a Connected World

The widespread adoption of smart glasses powered by Google Translate will unlock a plethora of user-centric benefits, fundamentally altering how we navigate the globe and interact with diverse communities.

Breaking Down Language Barriers in Travel and Tourism

For travelers, the world will become significantly more accessible. Navigating foreign cities, ordering food in local restaurants, chatting with shopkeepers, and understanding public announcements will all become far easier. Imagine exploring the bustling souks of Marrakech and conversing with vendors without sharing a language, or visiting ancient ruins in Rome and having historical context translated for you in real time. This kind of immersive travel removes much of the anxiety and limitation that language barriers bring, allowing for deeper cultural immersion and more authentic interactions. Travelers will no longer be passive observers but active participants in local life.

Enhancing Business Communication and Global Collaboration

In an increasingly globalized business world, effective cross-border communication is paramount. Smart glasses with Live Translate will transform international business meetings, conferences, and negotiations. Teams spread across continents will be able to collaborate smoothly and understand each other without needing a human interpreter in every instance. This will reduce costs, increase efficiency, and foster stronger international partnerships. Imagine a sales pitch to a client in Tokyo in which the nuances of the presentation come through intact, or a technical support call with a vendor in Berlin where the problem is understood and resolved in real time.

Facilitating Intercultural Understanding and Social Inclusion

Beyond commerce and travel, the impact on social interaction and cultural understanding is profound. Communities with diverse linguistic populations will become more integrated. Individuals will be able to connect with neighbors, participate in local events, and access essential services without the fear of being misunderstood. This fosters greater social inclusion and reduces isolation, particularly for immigrant populations and refugees. Imagine attending a local community meeting and understanding every speaker, or easily assisting someone in need who speaks a different language. This technology has the power to build bridges and create more harmonious societies.

Supporting Education and Lifelong Learning

The educational implications are equally transformative. Students can access learning materials from around the world, attend virtual lectures delivered in different languages, and engage with international peers on collaborative projects. For individuals pursuing lifelong learning, the ability to consume content and engage in discussions across linguistic divides opens up a universe of knowledge. Language learning itself could be revolutionized, with immersive experiences allowing users to practice speaking and understanding in real-time, accelerating the acquisition of new languages.

Assisting Individuals with Hearing Impairments and Communication Challenges

The visual capabilities of smart glasses offer a powerful new tool for people with hearing impairments. Augmented reality subtitles can provide a continuous stream of captioned or translated dialogue, allowing them to participate fully in conversations and access spoken information that was previously out of reach. This improves accessibility and promotes greater independence for a significant portion of the population. Moreover, for people who struggle with verbal communication, seeing their own words displayed and translated as text could offer a new avenue for expression.

The Future is Now: Google’s Strategic Vision for Smart Glasses

Google’s commitment to making Translate a killer app for smart glasses is a clear indication of its long-term strategy. By focusing on a core, universally valuable functionality, Google aims to drive adoption of its wearable hardware and establish a dominant ecosystem. This approach mirrors the strategy employed with products like Google Search and Gmail, which became indispensable tools by solving fundamental user needs.

The continuous advancements in AI, miniaturization of technology, and the increasing sophistication of wearable devices are all converging to make this vision a reality. While challenges remain, particularly in battery life, processing power, and user interface design, the trajectory is clear. Google is not just developing a feature; it is laying the groundwork for a future where language is no longer a barrier, but a bridge to a more connected, understanding, and collaborative world.

The Road Ahead: Challenges and Opportunities

While the potential is immense, several challenges must be addressed to fully realize the promise of Translate on smart glasses:

- Battery Life and Power Consumption: Running complex AI models and constant audio processing is power-intensive. Efficient power management and advancements in battery technology are crucial.

- Processing Power and Miniaturization: Sophisticated NMT and NLU require significant computational resources, which are limited in compact wearable devices. Further miniaturization and optimization of processing chips are necessary.

- User Interface and Experience: Designing an intuitive and unobtrusive user interface for smart glasses that seamlessly integrates translation without being distracting is a key design challenge.

- Privacy and Security: Handling sensitive conversational data requires robust privacy and security measures to build user trust.

- Accuracy and Nuance: While NMT has advanced significantly, capturing the full nuance, cultural context, and idiomatic expressions of every language remains an ongoing pursuit.
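Battery life in particular comes down to simple arithmetic. For a duty-cycled workload, average power is d × P_active + (1 − d) × P_idle, where d is the fraction of time translation is active, and runtime is the battery's stored energy divided by that average. The Python sketch below applies this; the 600 mW, 40 mW, 300 mAh, and 3.85 V figures in the example are hypothetical placeholders, not measurements of any real pair of glasses.

```python
def average_power_mw(active_mw: float, idle_mw: float, duty_cycle: float) -> float:
    """Time-weighted average power when translation runs only part of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_mw + (1.0 - duty_cycle) * idle_mw


def runtime_hours(capacity_mah: float, voltage_v: float, avg_power_mw: float) -> float:
    """Battery runtime: stored energy (mWh) divided by average draw (mW)."""
    energy_mwh = capacity_mah * voltage_v
    return energy_mwh / avg_power_mw


if __name__ == "__main__":
    # All figures are hypothetical placeholders, not measurements of any device.
    for duty in (1.0, 0.25):
        p = average_power_mw(active_mw=600.0, idle_mw=40.0, duty_cycle=duty)
        print(f"duty {duty:.0%}: {p:.0f} mW -> {runtime_hours(300.0, 3.85, p):.1f} h")
```

Under these placeholder numbers, cutting the active fraction from 100% to 25% more than triples the estimated runtime, which is why activating translation only while someone is speaking is an attractive power-management strategy.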

Despite these hurdles, the sheer potential of Google Translate to redefine human interaction on smart glasses is undeniable. This is not just about a translation tool; it’s about unlocking a new era of global communication, fostering unprecedented levels of understanding, and making the world a truly smaller and more interconnected place. The journey from text-based translation to real-time, immersive auditory and visual communication through smart glasses represents a profound leap forward, positioning Google Translate as the indispensable killer app that will define the future of wearable technology. We are on the cusp of a revolution, and Google Translate is leading the charge.